Here’s the route for today

👟 Topic: How I vibe code without touching my keyboard

👾 Get my AI notes: I’m documenting everything I learn about AI coding so you don’t have to. Get my personal notes below.

TOGETHER WITH WISPR FLOW

Dictate prompts and tag files automatically

Stop typing reproductions and start vibe coding. Wispr Flow captures your spoken debugging flow and turns it into structured bug reports, acceptance tests, and PR descriptions. Say a file name or variable out loud and Flow preserves it exactly, tags the correct file, and keeps inline code readable. Use voice to create Cursor and Warp prompts, call out a variable like user_id, and get copy you can paste straight into an issue or PR. The result is faster triage and fewer context gaps between engineers and QA. Learn how developers use voice-first workflows in our Vibe Coding article at wisprflow.ai. Try Wispr Flow for engineers.

TOPIC

How I vibe code without touching my keyboard

Quick SayMail update before we dive in:

The contact + email discovery feature is coming back online soon — I'm hoping by next week. Once it's live, I'll send out an update and open more early access spots.

Stay tuned.

Now, onto today's topic.

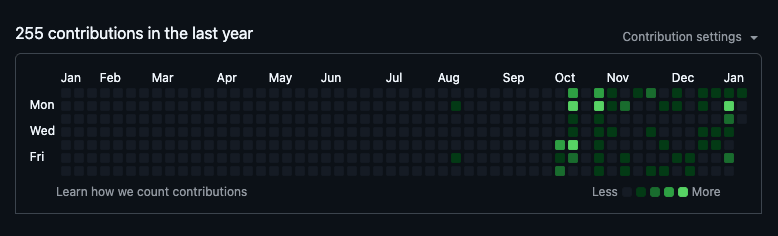

My GitHub contributions to date

It’s been 4 months since I started my AI building journey, and along the way, people have asked about my setup. What tools do I use? How do I actually prompt Cursor?

Funnily enough, it involves a tool that, like SayMail, uses your voice.

The setup (no secret sauce here)

My entire vibe coding environment consists of 4 things:

Cursor → my IDE where all the code lives

Claude Code → installed in the terminal, acts as the brain for operations

Separate LLM → a secondary AI model that lives outside Cursor

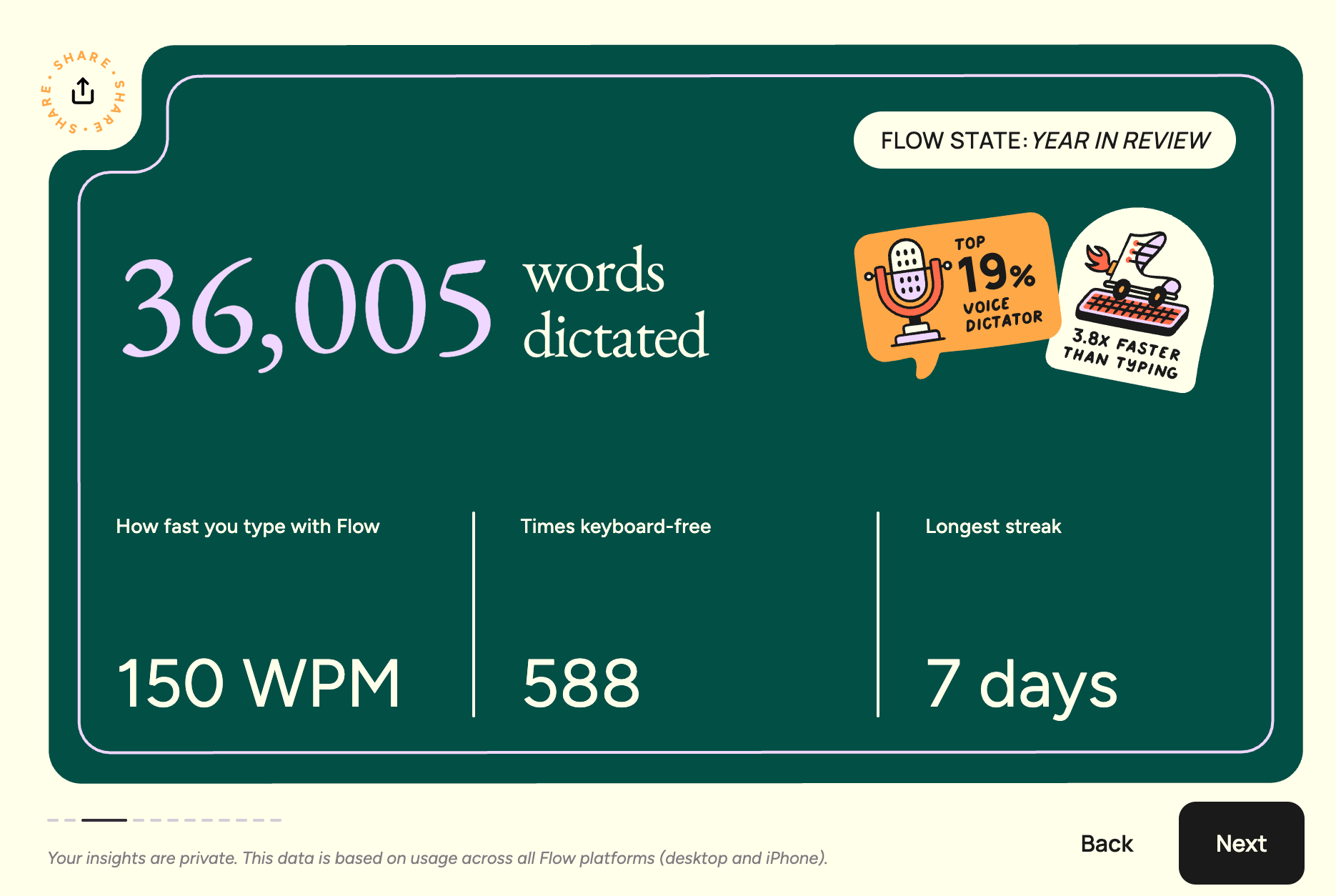

Wispr Flow → a voice dictation app that transcribes what I say in real-time

That's it. No fancy plugins. No complicated workflows.

Whenever I want to prompt Cursor, I hold down a key, speak what I want to say, and Wispr Flow transcribes it directly into my IDE.

*OK, I did clickbait the title a little: I do use my keyboard, but only one key!

My build speed has gone up about 10x, and I’ve done all of this on Wispr Flow’s free plan. It comes with 2,000 words a week, which is more than enough for me.

But voice dictation is only half the equation.

Meta-prompting: Making AI talk to AI

Here's the thing about vibe coding: we're not great at communicating with AI.

Which is ironic when you think about it, because who else would talk to AI?

Well... AI.

This is what I call meta-prompting: asking AI to write instructions for AI.

Whenever I have a complex feature to build, I don't try to one-shot the prompt myself. Instead, I open a separate Claude chat (not Claude Code, just the regular website) and let it do the heavy lifting.

Here's my exact process:

Open a separate chatbot — I use Claude, but ChatGPT works too

Explain what you want to build — describe the feature, your goals, edge cases to avoid

Ask it to generate a prompt — something like: "Help me write a prompt I can paste into Cursor to achieve this"

Review the output — make sure it covers everything you intended

Paste it into Cursor — and watch the magic happen
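To make the "explain what you want to build" and "ask it to generate a prompt" steps concrete, here is a minimal sketch of how that request could be assembled. It's an illustration under stated assumptions, not a required part of the workflow: `FeatureRequest`, `build_meta_prompt`, and the example feature are hypothetical names of my own, and the helper only builds text. It doesn't call Claude, ChatGPT, or Cursor; you'd still paste the result into the chat yourself.

```python
from dataclasses import dataclass, field


@dataclass
class FeatureRequest:
    """Hypothetical container for what you'd explain to the planning chatbot."""
    feature: str
    goals: list[str] = field(default_factory=list)
    edge_cases_to_avoid: list[str] = field(default_factory=list)


def build_meta_prompt(req: FeatureRequest, target_tool: str = "Cursor") -> str:
    """Assemble a request asking one chatbot to write a prompt for another AI tool.

    Hypothetical helper: it only formats text and does not call any model API.
    """
    lines = [f"I want to build this feature: {req.feature}"]
    if req.goals:
        lines.append("Goals:")
        lines.extend(f"- {goal}" for goal in req.goals)
    if req.edge_cases_to_avoid:
        lines.append("Edge cases to avoid:")
        lines.extend(f"- {case}" for case in req.edge_cases_to_avoid)
    # The closing ask mirrors the example phrasing from the steps above.
    lines.append(
        f"Help me write a prompt I can paste into {target_tool} to achieve this."
    )
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical example feature, for illustration only.
    request = FeatureRequest(
        feature="a contact search box on the dashboard",
        goals=["filter contacts as the user types"],
        edge_cases_to_avoid=["empty search terms", "duplicate contacts"],
    )
    print(build_meta_prompt(request))
```

The structure just keeps you from forgetting goals or edge cases when you describe the feature. You still review whatever prompt the chatbot writes back before pasting it into Cursor.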

The first time I did this, the prompt Claude generated was insanely detailed.

I'm talking edge cases I never would have thought of, specific implementation notes, and error-handling suggestions: all things that would've taken me 30–60 minutes to write myself.

AI did it in seconds.

Since adopting this method, I rarely have to redo instructions. The prompts are just... better than what I could write on my own.

Snapshot of a prompt example

Why this works

The core insight here is simple: AI is better at talking to AI than we are.

When you explain a feature to Claude conversationally, it extracts the intent and translates it into precise, technical instructions that another AI can execute.

You stay in "thinking mode." The AI handles "instruction mode."

It's a small shift, but it's changed how I build.

Quick recap

If you want to try this yourself:

→ Voice dictation speeds up prompting (for me, by about 10x). I use Wispr Flow, but use whatever works for you.

→ Meta-prompting produces better instructions than you could write manually

→ Separate your chats: one for planning, one for building

That's how I've been vibe coding SayMail.

That’s all for today. If you enjoyed this post, share it with a friend!

If they subscribe, I’ll send you my personal AI coding doc!

To refer, use your unique link.

See you next Tuesday 🤝

-Michael Ly

PRESENTED BY NEURONS

Creativity + Science = Ads that perform

Join award-winning strategist Babak Behrad and Neurons CEO Thomas Z. Ramsøy for a strategic, practical webinar on what actually drives high-impact advertising today. Learn how top campaigns capture attention, build memory, and create branding moments that stick. It’s all backed by neuroscience, and built for real-world creative teams.

REFERRALS

Share The Leap Sprint with a friend using your unique referral link.